A Deep Dive Into Data Enrichment

In this next installment of the data science series, we tackle data enrichment, the dirty work of data science.

Data Enrichment

In one of my old classes at NC State, we were working in Excel with a class survey on the recycling habits of the student body. As expected, a few people did not take it seriously (you recycle 2,684 bottles every day? Probably not). Our teacher made us go into the spreadsheet and clean up the junk people had left. At a very basic level, this is data enrichment: taking raw, unstructured data and putting it into a format that is understandable and suitable for analysis.
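The cleanup from that class can be sketched in a few lines of Python. This is only an illustration: the survey rows, the `MAX_PLAUSIBLE_BOTTLES` threshold and the `is_plausible` helper are all assumptions made up for the example, not part of the original survey.

```python
# Hypothetical survey rows: (respondent id, bottles recycled per day).
responses = [
    ("s01", 3),
    ("s02", 0),
    ("s03", 2684),  # clearly not a serious answer
    ("s04", 5),
    ("s05", -1),    # impossible value
]

# Assumed plausibility limit; a real project would need to justify this choice.
MAX_PLAUSIBLE_BOTTLES = 50


def is_plausible(bottles: int) -> bool:
    """Return True when the answer falls inside the assumed plausible range."""
    return 0 <= bottles <= MAX_PLAUSIBLE_BOTTLES


# Keep the rejected rows as well, so a human can review what was dropped.
cleaned = [row for row in responses if is_plausible(row[1])]
rejected = [row for row in responses if not is_plausible(row[1])]

print("kept:", cleaned)
print("rejected:", rejected)
```

Keeping the rejected rows in their own list, rather than silently discarding them, leaves room for the human review described in the next paragraph.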

Data wrangling is done by both humans and automated machines. Why not always use machines? Because humans are, at least currently, better at recognizing subtleties in information, such as sarcasm or minute details in satellite pictures. That’s not to say that machine algorithms haven’t come a long way. IBM’s CRUSH predictive analytics software helped reduce serious crime in Memphis by 30% and violent crime by 15% by forecasting criminal hotspots so that personnel could be allocated accordingly.

For some more awesome algorithms, check out “The 10 Algorithms That Dominate Our World.” If you’re more savvy with math and data science, also check out Marcos Otero’s “The real 10 algorithms that dominate our world.”

Extract Transform Load

This is the nitty-gritty: extracting, transforming and loading data. ETL as a process starts with retrieving data from the storage source(s), then reshapes it and loads it into a target database. Most data projects draw on multiple sources with a variety of structures, ranging from relational databases like SQL Server to non-relational systems such as IBM’s Information Management System (IMS), indexed sequential access method (ISAM) or virtual storage access method (VSAM) files. Each of these sources needs a different amount of processing to get its data ready for the target database.
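To make the three steps concrete, here is a minimal sketch using only the Python standard library. The two sources, their field names and the `customers` target table are hypothetical. An in-memory CSV string and a list of dictionaries stand in for real systems, and an in-memory SQLite database stands in for the target.

```python
import csv
import io
import sqlite3

# Hypothetical source 1: a CSV export (an in-memory string stands in for a file).
CSV_SOURCE = """customer_id,name,signup_date
1, Alice ,2016-01-05
2,BOB,2016-02-11
"""

# Hypothetical source 2: records from another system with a different shape.
LEGACY_SOURCE = [
    {"id": "3", "full_name": "carol", "joined": "03/15/2016"},
]


def extract():
    """Extract: read raw rows from each source without changing them."""
    csv_rows = list(csv.DictReader(io.StringIO(CSV_SOURCE)))
    return csv_rows, LEGACY_SOURCE


def transform(csv_rows, legacy_rows):
    """Transform: map both shapes onto one schema and normalize the values."""
    unified = []
    for row in csv_rows:
        name = row["name"].strip().title()
        unified.append((int(row["customer_id"]), name, row["signup_date"]))
    for row in legacy_rows:
        month, day, year = row["joined"].split("/")
        iso_date = f"{year}-{month}-{day}"  # MM/DD/YYYY -> YYYY-MM-DD
        unified.append((int(row["id"]), row["full_name"].strip().title(), iso_date))
    return unified


def load(rows, connection):
    """Load: write the unified rows into the target table."""
    connection.execute(
        "CREATE TABLE IF NOT EXISTS customers "
        "(id INTEGER PRIMARY KEY, name TEXT, signup_date TEXT)"
    )
    connection.executemany("INSERT INTO customers VALUES (?, ?, ?)", rows)
    connection.commit()


def run_etl():
    """Run all three steps against an in-memory target database."""
    connection = sqlite3.connect(":memory:")
    csv_rows, legacy_rows = extract()
    load(transform(csv_rows, legacy_rows), connection)
    return connection.execute("SELECT * FROM customers ORDER BY id").fetchall()


if __name__ == "__main__":
    for record in run_etl():
        print(record)
```

Most of the work lands in `transform`, which is where the differences between sources (name casing, stray whitespace, date formats) get reconciled before anything reaches the target.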

API Connectors

APIs don’t transform data; they access data from a system through its exposed functionality rather than through the UI. To better illustrate what an API is, take your laptop for example. The USB ports, headphone jack, speakers and video output are examples of APIs for your computer. If you aren’t satisfied with the internal speakers and screen size, you can connect to a bigger screen and use external headphones.
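In code, the same idea looks roughly like the sketch below. `SurveyStore` is a hypothetical class invented for this example: its public methods play the role of the laptop’s ports, while the internal dictionary is the part callers are not meant to touch.

```python
class SurveyStore:
    """A hypothetical system that holds survey responses."""

    def __init__(self):
        # Internal storage; callers are not meant to reach in here directly.
        self._responses = {}

    # The methods below are the exposed functionality, i.e. the API.
    def submit(self, student_id: str, bottles_per_day: int) -> None:
        """Record one student's answer."""
        self._responses[student_id] = bottles_per_day

    def get(self, student_id: str) -> int:
        """Return the stored answer for one student."""
        return self._responses[student_id]

    def count(self) -> int:
        """Return how many answers have been recorded."""
        return len(self._responses)


# Another program works with the data through the API alone.
store = SurveyStore()
store.submit("s01", 3)
store.submit("s02", 0)
print(store.get("s01"), store.count())
```

Because callers depend only on `submit`, `get` and `count`, the storage behind them could change without breaking anything that uses the API.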

A more advanced example is the Microsoft Azure Machine Learning API. Azure turns data scientists’ work from days into minutes once a feasible model is developed. It enables them to use predictive models in IoT applications by providing APIs for fraud detection, text analytics and recommendation systems.

Open Source Tools

The term open source denotes software for which the original source code is made freely available and may be redistributed and modified. The average computer user never sees the source code, but it is what developers use to manipulate and change a piece of software. With open source software, the authors make the source code available to anyone, which gives developers the ability to learn from it and change it to fit the needs of their applications. This promotes all kinds of development in this space. In fact, under copyleft licenses such as the GPL, a developer who distributes a modified version must share its source code under the same terms, and the license itself cannot require a royalty or licensing fee.

Some of the most popular tools in data science are Hadoop, MapReduce, and HPCC. Hadoop is so popular that the terms “big data” and “Hadoop” are almost synonymous. MapReduce is a programming model and software framework for writing applications that rapidly process vast amounts of data in parallel on large clusters of compute nodes. HPCC Systems (High Performance Computing Cluster) is an open source, massively parallel processing platform for big data processing and analytics that runs on Linux, with a free community version and paid enterprise solutions.
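The MapReduce programming model is easier to picture with a toy word count. The sketch below runs in a single Python process, so it shows only the shape of the model (map, group by key, reduce) and not Hadoop’s API or any real cluster behavior. The sample documents and function names are assumptions made up for illustration.

```python
from collections import defaultdict
from functools import reduce

# Hypothetical input; on a real cluster each document could sit on a different node.
documents = ["big data big clusters", "data in parallel", "big clusters"]


def map_phase(document):
    """Map: emit a (word, 1) pair for every word in one document."""
    return [(word, 1) for word in document.split()]


def shuffle_phase(pairs):
    """Shuffle: group all emitted values by their key."""
    grouped = defaultdict(list)
    for key, value in pairs:
        grouped[key].append(value)
    return grouped


def reduce_phase(key, values):
    """Reduce: combine all values for one key into a single result."""
    return key, reduce(lambda total, value: total + value, values)


def word_count(docs):
    """Run the three phases in sequence and return a word -> count mapping."""
    mapped = [pair for doc in docs for pair in map_phase(doc)]
    grouped = shuffle_phase(mapped)
    return dict(reduce_phase(key, values) for key, values in grouped.items())


if __name__ == "__main__":
    print(word_count(documents))
```

Each call to `map_phase` looks at one document and each call to `reduce_phase` looks at one key, so neither depends on the rest of the data. That independence is what lets a framework spread the work across many compute nodes.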

Data Integration

Data integration is similar to ETL, but it focuses on unifying the data from multiple applications as opposed to data generation.

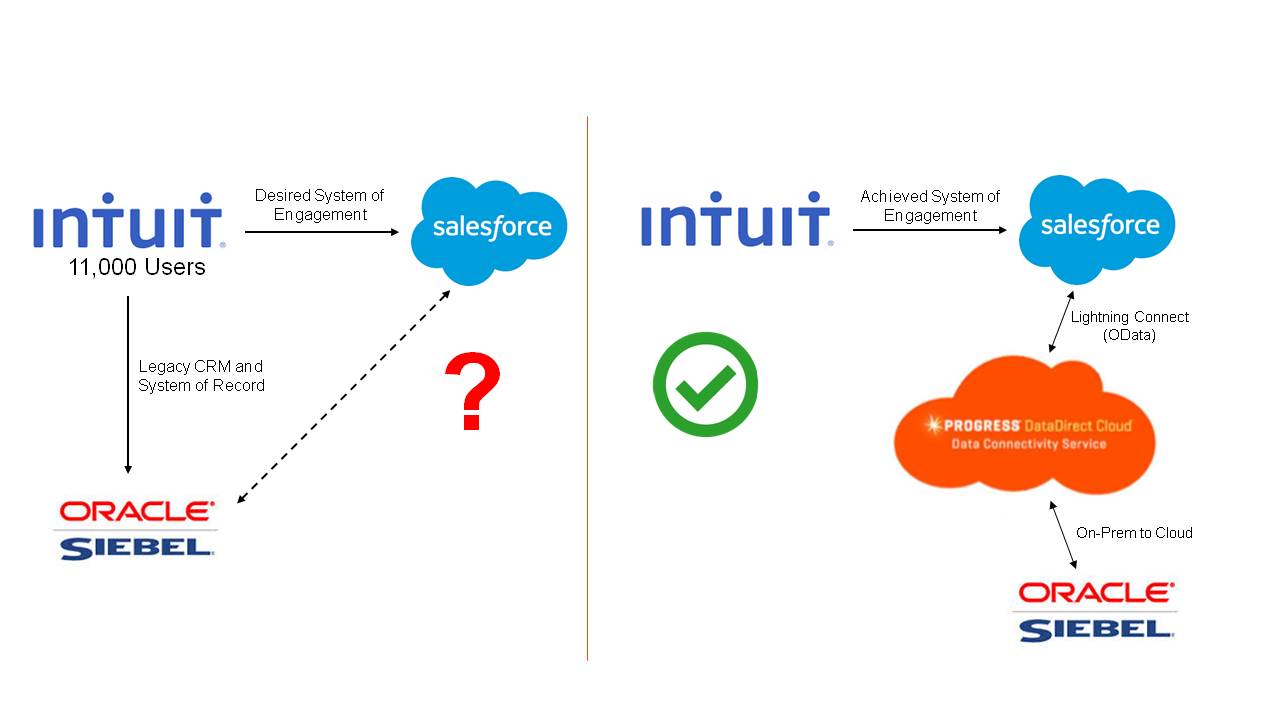

For example, Siebel was Intuit’s legacy CRM and system of record. When they wanted to switch to Salesforce, the sales team had to use Siebel and Salesforce separately to get all the data they needed. That’s when we stepped in using DataDirect Cloud and Salesforce Connect to create a single, unified user experience so that the sales team didn’t have to use Salesforce AND Siebel.
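At its simplest, unifying records from two applications means matching them on a shared key and merging their fields into one view. The sketch below shows that idea only. It is not how DataDirect Cloud or Salesforce Connect work, and the record layouts, field names and `unify` helper are hypothetical.

```python
# Hypothetical records from a legacy CRM.
legacy_crm = [
    {"email": "pat@example.com", "account_owner": "Lee", "open_orders": 2},
    {"email": "sam@example.com", "account_owner": "Kim", "open_orders": 0},
]

# Hypothetical records from the newer CRM.
new_crm = [
    {"email": "pat@example.com", "last_contacted": "2016-04-01"},
    {"email": "ria@example.com", "last_contacted": "2016-04-03"},
]


def unify(primary, secondary, key="email"):
    """Merge records from two systems into one record per key.

    Fields from `secondary` are added to, and overwrite on conflict,
    the fields from `primary`.
    """
    merged = {}
    for record in primary:
        merged[record[key]] = dict(record)  # copy so the inputs stay untouched
    for record in secondary:
        merged.setdefault(record[key], {}).update(record)
    return [merged[k] for k in sorted(merged)]


if __name__ == "__main__":
    for customer in unify(legacy_crm, new_crm):
        print(customer)
```

A customer who appears in both systems comes back as a single record, while customers known to only one system are still included, so a user of the unified view never has to check both applications.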

Maximize Your Data Connectivity

Here at Progress, we offer some of the most advanced data integration connectors available. Digital business transformation starts here, as we like to put it! We enable anyone to rapidly develop and deliver applications that drive customer success. We offer connectors for every data source and have 24/7 award-winning customer service for all of our solutions. Pick up a free trial today!

Suzanne Rose

Suzanne Rose was previously a senior content strategist and team lead for Progress DataDirect.
